Pushing Frontier AI to Its Limits

My last post was more than 14 months ago, right around the time the LLM hype exploded: AI workflows, AI agents, ... I stayed silent for a while, busy watching everyone get their minds blown by how LLMs could solve LeetCode problems, up to 80% of the hard ones, or how RAG could change how traditional chatbots work. People with ML backgrounds didn't quite accept that building AI is now just OpenAI API integration - something any developer can do. The beauty of data science used to lie in playing with data, feature engineering, model tuning, etc.

But then so many new AI applications became useful. New techniques and new "tasks" emerged around them: prompt engineering, token optimization, creating MCPs for existing apps, tool calling, etc. The models just got so much better - we gave them tools to push their capacity beyond pure reasoning. People used to complain about LLMs hallucinating on outdated data. Now LLMs without web search or reasoning or MCPs are just ... weird. Grok can give you real-time answers with up-to-date information on what just happened; Claude will try to run Python code to solve a complex math problem where possible.

You and I can't ignore that anymore. I started with small stuff: creating a UDF that calls OpenAI to process a pandas dataset, building an MCP on top of ClickHouse. Then I started using AI agents and building things more seriously. There are thousands of models out there now, from large to small, closed to open weight. The coding agents are really good now, I'd say. I built LLM workflows, played with MCP, deployed vector databases, RAG, etc.

Coding agents control the terminal. I'm not writing code or even reading it - I'm watching them work instead. I test their results, tell them what I expect tests to look like to keep them focused, and build skills to teach them specific tasks. This is the new normal, I guess.

I've tried over a hundred models and tools in the past year: GitHub Copilot from the early days, Tabnine, v0.dev, Codex, Claude Code, Cursor, Windsurf, opencode, n8n + AI Agent node, code review tools like CodeRabbit, Greptile, Sourcery, etc., plus dozens of models: gpt-4o, Claude, Gemini, Grok, Mistral, DeepSeek, Qwen, MiniMax, GLM, etc. Both free and paid. I can't tell you which one is "the best" because they'll be legacy by next week. When choosing a framework for AI applications, there are tons of options: LangChain, LangGraph, OpenAI Agents SDK, then Claude Agent SDK came along and was better, Cloudflare Agents, Vercel AI SDK. The competition never ends. Maybe 90% of AI projects are just wrapping LLM APIs - most don't ship anything real. A few stand out, some become worth millions and turn into the next big thing, but most of them are just demos or POCs. I have no idea.

While people are still scared of vibe coding, I ship it to production. For me, AI agents are no longer just tools for learning or asking questions about your codebase - they're fully capable of producing production-grade code if you plug them into the right tools and give them good instructions. My top language on WakaTime is now markdown, damn. Things change fast. Your model gets stuck today, but tomorrow someone releases something better. You have an idea, someone builds a product around it, and it gets killed or goes legacy some random morning.

I didn't stop writing - there are tons of drafts in my Obsidian, none published because they became outdated before I could finish them. I want to kick off this first 2026 post as my digital garden - a place to reflect on what I'm thinking and doing in this LLM era. This post will be updated from time to time.

Top on my list

Updated Jan 2026

Claude Code

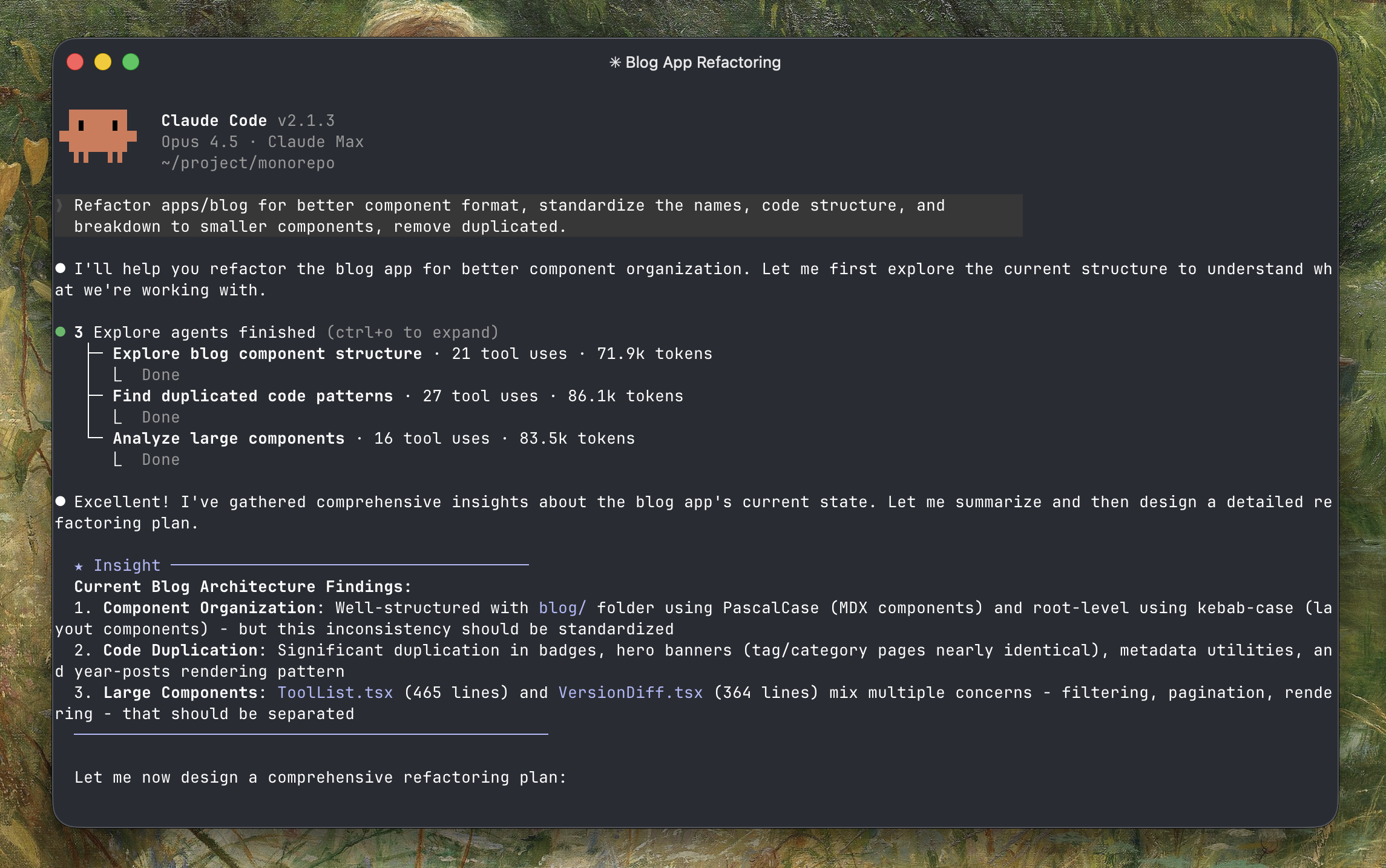

Claude Code is still the king among all the coding agents I've tried. I've used Cursor, Codex, Antigravity, Gemini CLI, Droid, Roo Code, Kilo Code, Kiro, etc. None of them can beat Claude Code in my opinion. But I suggest you try all of them if you can - use a different one for each side project.

It just works - not only for coding, but for understanding complex systems, refactoring, writing docs, doing homework, planning travel, summarizing news, fixing your system, etc. "90% of code in Claude Code is written by itself" - How Claude Code is built. It's a general-purpose AI agent. Interestingly, it wasn't originally designed for coding. It started as Boris's side project.

The idea for Claude Code came from a command-line tool that used Claude to display what music an engineer was listening to at work. It spread like wildfire at Anthropic after being given access to the filesystem. Today, Claude Code has its own fully-fledged team

The shift from Copilot or Cursor (back in early 2025) to coding agents is like going from autocomplete to having other developers on your team. It's more like having teammates who do their own work, not a pair programmer grabbing your keyboard. They work on their own - I just review results, give feedback when asked, and honestly still can't believe this works. Your mindset changes from "I need to write good code" to "I need to write good prompts and build good skills". Most code in my GitHub repos is now generated without me writing a single line. I just prompt, watch, and test.

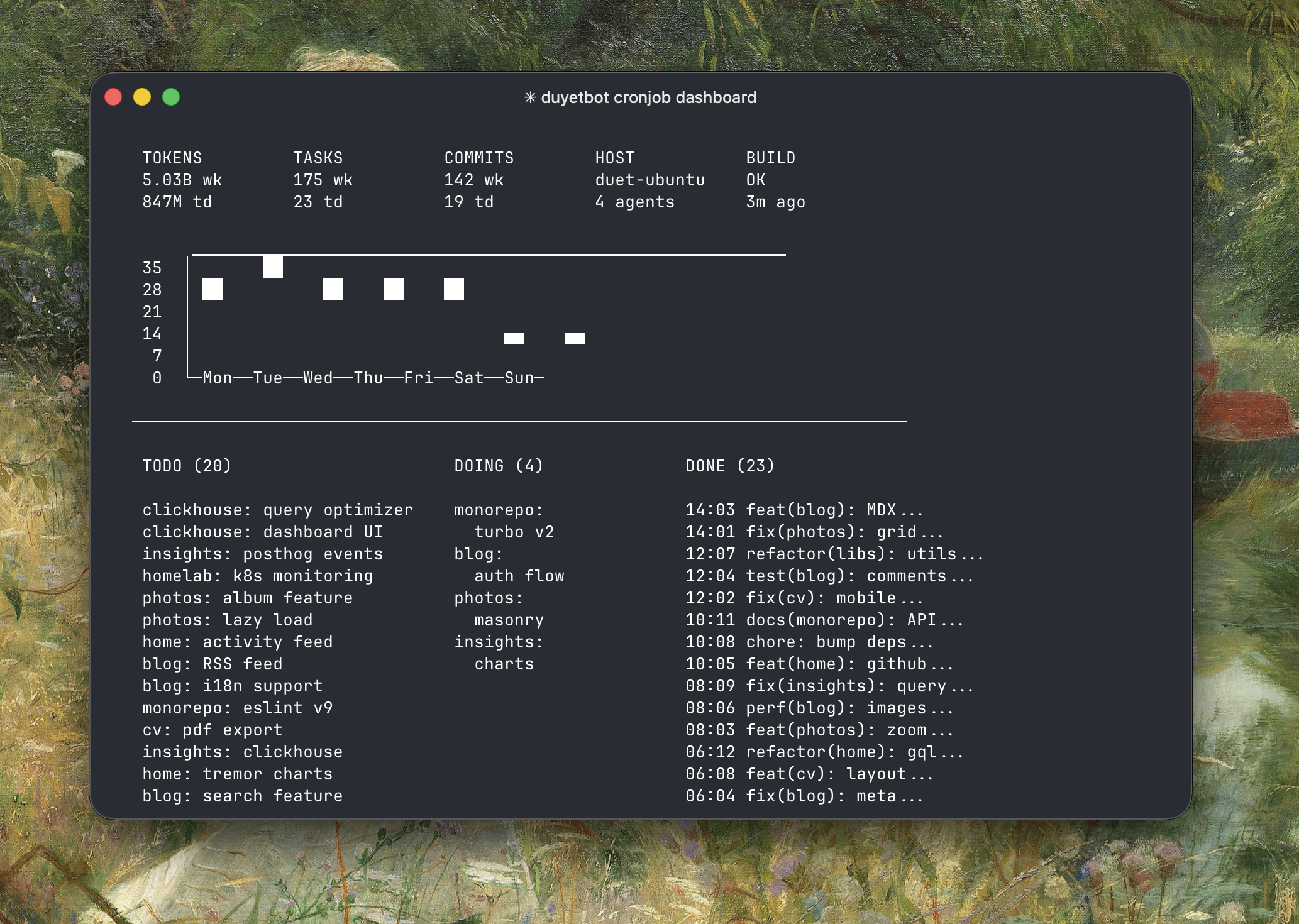

duyet.net gets updated automatically by Claude Code overnight with a custom Claude wrapper - my experiment to see how far Claude Code can go. Sometimes it researches new designs, sometimes it breaks the website, but it's fun to see. The script looks something like this:

The prompt.md file contains the task list and instructions. Claude reads it, executes, and updates the state for each loop. For more advanced use cases, check out Claude Code + Ralph Loop - it runs non-stop sessions that consume tasks while you can prompt it to read state or a TODO.md file on the fly.
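The original script is not reproduced here, so the following is only a minimal Python sketch of that kind of loop, not my actual wrapper. It assumes the claude CLI is on PATH and that its -p flag runs a single non-interactive session; the function names and the iteration/pause parameters are hypothetical.

```python
import subprocess
import time
from pathlib import Path
from typing import Callable, Sequence


def build_command(prompt: str) -> list[str]:
    """Build one non-interactive Claude Code invocation."""
    # Assumption: `claude -p <prompt>` runs a single headless session.
    return ["claude", "-p", prompt, "--dangerously-skip-permissions"]


def run_loop(
    prompt_file: Path,
    iterations: int,
    pause_seconds: float = 0.0,
    runner: Callable[[Sequence[str]], int] = subprocess.call,
) -> list[int]:
    """Run one agent session per iteration and collect the exit codes."""
    exit_codes = []
    for _ in range(iterations):
        # Re-read the file each time so task-list edits are picked up.
        prompt = prompt_file.read_text(encoding="utf-8")
        exit_codes.append(runner(build_command(prompt)))
        time.sleep(pause_seconds)
    return exit_codes
```

Something like run_loop(Path("prompt.md"), iterations=8, pause_seconds=600) would cover one night. The runner argument exists so the loop can be dry-run with a stub instead of launching real sessions.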

There's no one correct way to use Claude Code. The following sections are for anyone curious about how I use it - skip this if you're already familiar with Claude Code.

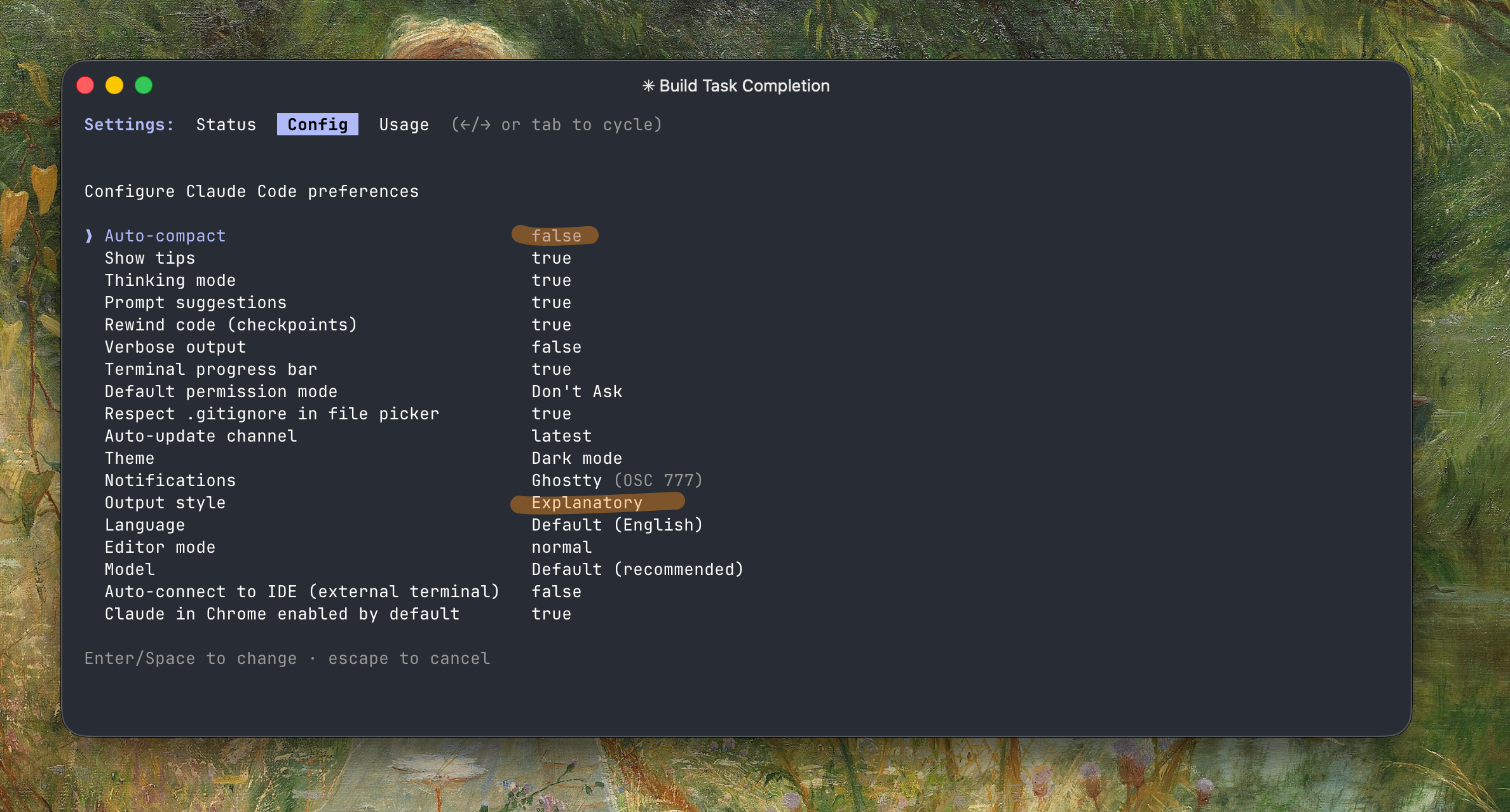

Claude Code Setup

I prefer disabling Auto-compact - it's slow, wastes 45.0k tokens (22.5%) for the buffer, and usually loses context. I use sub-agents when possible since they have their own context. Otherwise I run /export to the clipboard, then /clear and paste the previous content back. The export won't include thinking tokens or tool calls, so you save a lot and the model still tracks well.

I always work with --dangerously-skip-permissions - it's not as dangerous as you'd think.

My default MCPs are context7, sequential-thinking, and zread, though the exact set depends on the project I'm working on.

Skills I'm currently working on: supabase/agent-skills, vercel-labs/agent-skills, code-simplifier for refactoring and simplifying complex code while preserving functionality, and frontend-design for generating distinctive, production-grade frontend interfaces that avoid generic AI aesthetics (see the Frontend Aesthetics Cookbook for prompting tips).

History

- Mid 2025: SuperClaude_Framework - a collection of commands, agents, and behaviors installed in your .claude folder. Claude Plugins is more convenient now.

- Early 2025: Zen MCP was a game changer at the time - it let you invoke other providers like Gemini for brainstorming.

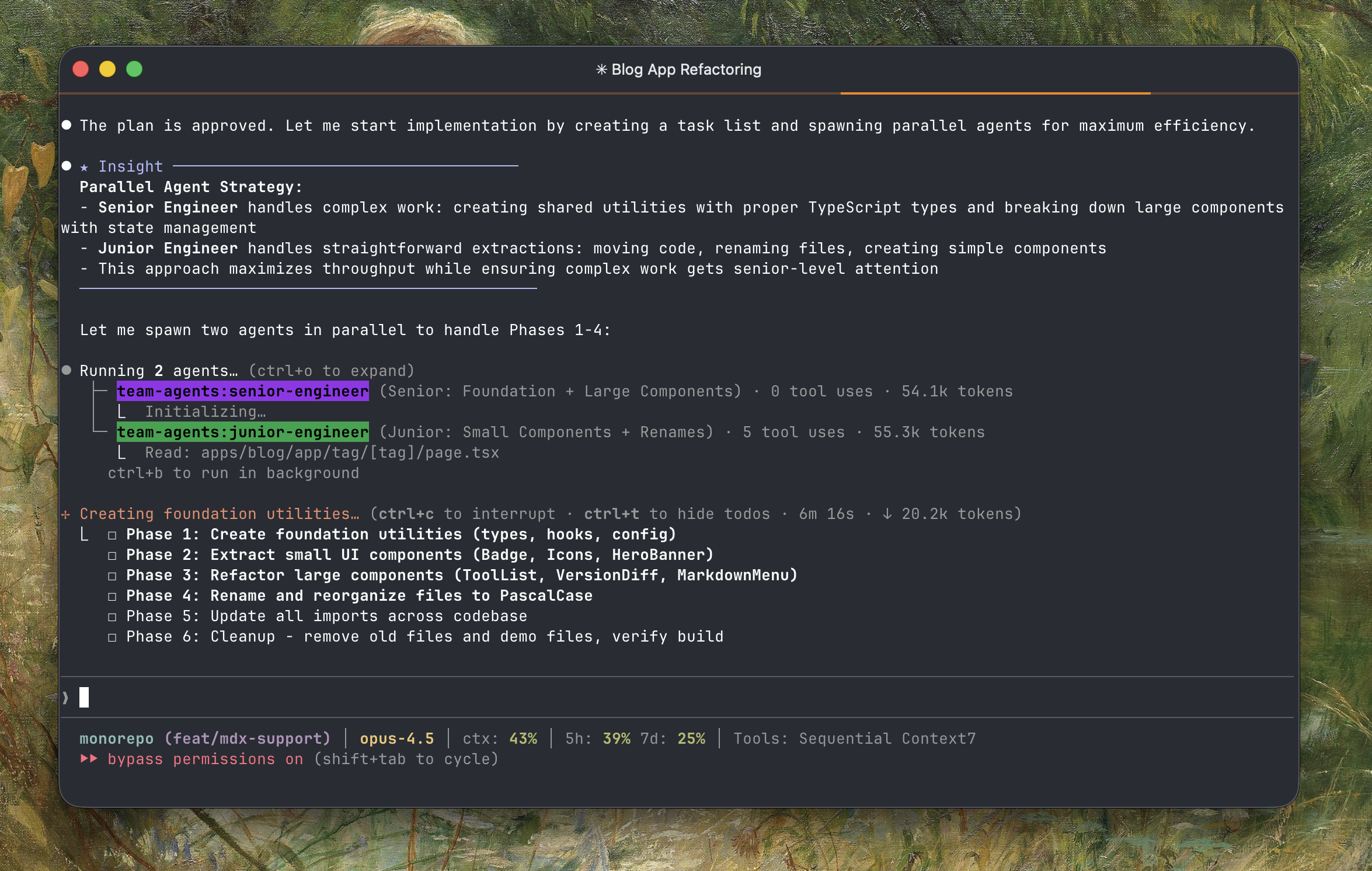

Parallel agents

Don't just generate code: start leading a team of parallel agents and use background tasks for them.

I built a team-agents plugin: a coordinated agent team for parallel task execution, where a leader delegates to senior and junior agents. I keep the number of roles minimal, but you can add more for specific tasks. The high-level architecture stays with you; the team parallelizes the work while maintaining quality on the complex parts.
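To make the delegation idea concrete, here is a small Python sketch. It is not the plugin's implementation: Task, assign_role, run_team, and fake_worker are hypothetical names, and the worker is a stand-in for whatever actually launches a sub-agent.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Task:
    name: str
    complex: bool  # hypothetical flag set by the leader


def assign_role(task: Task) -> str:
    """Leader rule: hard parts go to the senior, the rest to juniors."""
    return "senior" if task.complex else "junior"


def run_team(
    tasks: list[Task],
    worker: Callable[[str, Task], str],
    max_parallel: int = 4,
) -> list[str]:
    """Delegate every task to a role and run the workers in parallel."""
    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        futures = [pool.submit(worker, assign_role(t), t) for t in tasks]
        # Collect in submission order so results line up with `tasks`.
        return [f.result() for f in futures]


def fake_worker(role: str, task: Task) -> str:
    # Stand-in for launching a real sub-agent session.
    return f"{role}:{task.name}"
```

Keeping the role decision in one small function is what makes it cheap to add more roles later without touching the parallel execution part.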

duyet/claude-plugins

https://github.com/duyet/claude-plugins: A collection of plugins I use for Claude Code, including skills, MCPs, commands, and hooks across all my machines and Claude Agent SDK apps. You might find something useful here. The sub-agents and skills in this repo keep results consistent across codebases - I use Claude Code to learn patterns and update them over time.

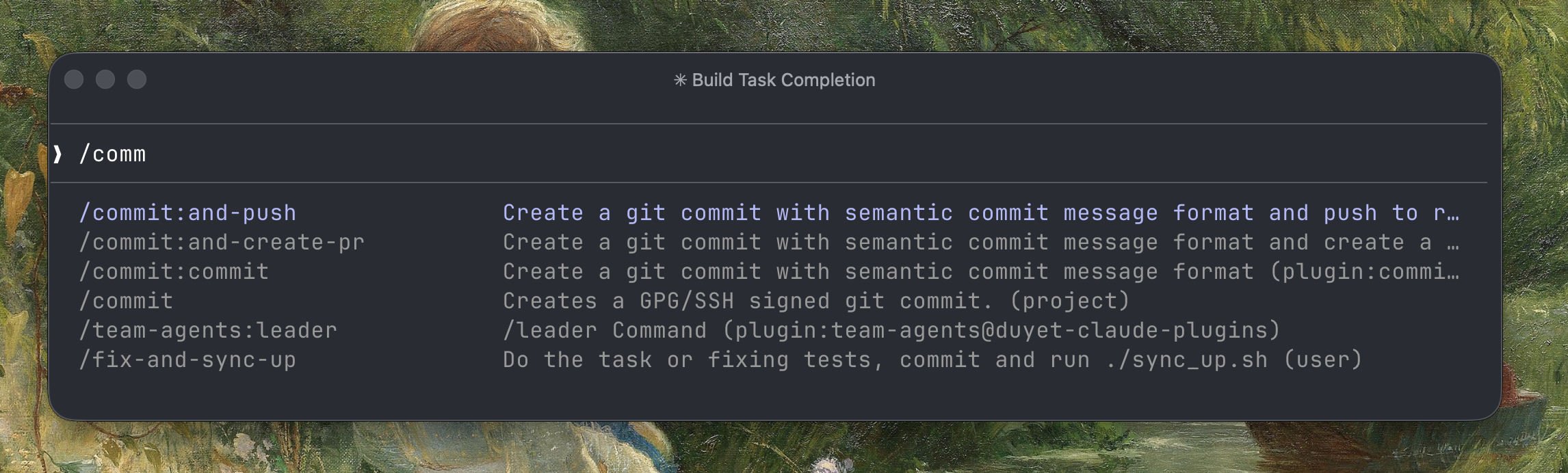

I started seeing AI engineers on X sharing their commands. I have a list of my own to make the workflow faster. This saves me from repeated prompting - some of the commands I use most:

Plan Mode

Plan mode performs significantly better than just prompting directly. When you give Claude time to think and plan first, the results are way more accurate. Less back-and-forth, fewer mistakes.

Hit shift+tab twice to enter Plan mode. I do this for most tasks and start a new session for each one.

Claude writes a plan file for you to review - keep adjusting until you're happy with it.

Once the plan is solid, Claude usually finishes the whole thing in one shot without asking questions.

Tip: If you're not clear about something, trigger the deep research agent first:

This helps Claude gather context before planning.

With a good plan, I usually don't do much here - just let it run. You can open another Claude Code session to work on something else while waiting.

If things go off track, inject a prompt mid-way. Claude will catch up and keep going.

You can kick off background agents for specific tasks (research, small changes, refactoring) while working.

The Explanatory output style shows you why Claude made certain choices - useful for learning.

I use agents for review: @code-simplifier cleans up the code, @refactor or @testing for specific checks.

Claude Hooks save time here - auto-format, run linters, or custom verification.
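As a rough illustration of an auto-format hook, the Python sketch below picks a formatter for the file an edit touched. It assumes the hook receives a JSON event on stdin with the edited path under tool_input.file_path; treat that payload shape as an assumption and check it against the hooks documentation. The formatter mapping is only an example.

```python
import json
from pathlib import Path
from typing import Optional

# Formatter per file extension; this mapping is an illustrative choice.
FORMATTERS = {
    ".py": ["ruff", "format"],
    ".ts": ["prettier", "--write"],
    ".go": ["gofmt", "-w"],
}


def formatter_command(raw_event: str) -> Optional[list[str]]:
    """Return the formatter command for the edited file, if any."""
    event = json.loads(raw_event)
    # Assumed payload shape: {"tool_input": {"file_path": "..."}}
    file_path = event.get("tool_input", {}).get("file_path")
    if not file_path:
        return None
    base = FORMATTERS.get(Path(file_path).suffix)
    return base + [file_path] if base else None
```

A hook entry script would read sys.stdin, call formatter_command, and pass the result to subprocess.run when it is not None.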

Agent Teams

Agent Teams just dropped - this is basically what I was trying to build with my team-agents plugin. Multiple Claude Code sessions that actually talk to each other, not just report back to a parent. Now it's built-in. Enable with CLAUDE_CODE_EXPERIMENTAL_AGENT_TEAMS.

Haven't tried it much yet, but I think this will become the new standard for how AI agents work together.

Use cases I'm planning to try:

- Parallel code review - spawn reviewers for security, performance, and test coverage, each applying a different lens to the same PR

- New features with clear boundaries - each teammate owns a separate module without stepping on each other

- Debugging - spawn teammates with different theories, have them try to disprove each other

CLAUDE.md, AGENTS.md

First thing Claude does when starting a session is read your CLAUDE.md file. Most people ignore it, but it's actually really important. It keeps things consistent across sessions and saves time - Claude doesn't need to re-investigate your project setup every time.

A few tips:

- Keep it short - Claude reads this every session, don't make it a novel

- Make it specific - tell it your stack (use bun, not npm), your conventions (use semantic commits), your preferences

- Update it constantly - if you keep correcting Claude on the same thing, that's a signal it should be in CLAUDE.md. Just say "remember this to CLAUDE.md"

- Subdirectory CLAUDE.md files - this is useful for monorepos, lazy loaded when Claude is actively working in that part of the codebase (e.g. apps/home/CLAUDE.md, apps/blog/CLAUDE.md, etc).

AGENTS.md serves a similar purpose. If you use both Claude Code and other coding agents (like Codex, Cursor), create a symlink so they share the same instructions:

or put instructions in AGENTS.md (an open standard) and reference it from CLAUDE.md:

Claude Code reads CLAUDE.md, Codex reads AGENTS.md - you only maintain one.
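The symlink option can be sketched in Python as below. The direction is my assumption (AGENTS.md holds the content and CLAUDE.md points at it), and link_claude_to_agents is a hypothetical helper name.

```python
import os
from pathlib import Path


def link_claude_to_agents(project_dir: Path) -> Path:
    """Make CLAUDE.md a symlink to AGENTS.md inside project_dir."""
    agents = project_dir / "AGENTS.md"
    claude = project_dir / "CLAUDE.md"
    if not agents.exists():
        raise FileNotFoundError(f"{agents} must exist before linking")
    if claude.is_symlink() or claude.exists():
        raise FileExistsError(f"{claude} already exists; merge it by hand first")
    # A relative target keeps the link valid when the repo is moved or cloned.
    os.symlink("AGENTS.md", claude)
    return claude
```

Refusing to overwrite an existing CLAUDE.md is deliberate: silently replacing it would drop instructions you may have accumulated there.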

Here's a snippet from my global ~/.claude/CLAUDE.md that applies to every project:

Interview Mode

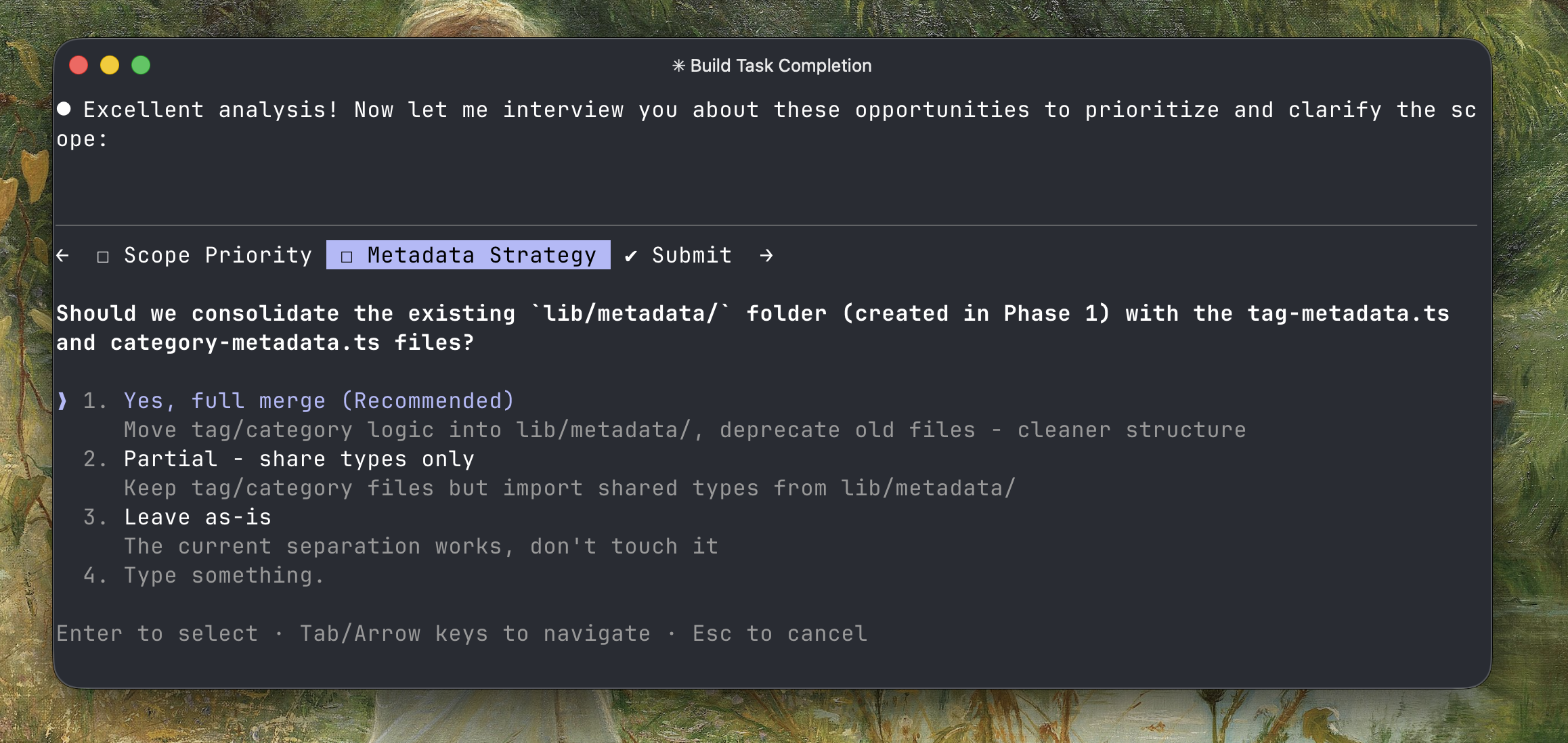

For complex tasks, try my /interview plugin - it asks clarifying questions before you start planning. It helps catch missing requirements early.

Long-running and self-improving coding agent

Long-running autonomous coding agents - this is something I've always wanted to achieve. From the beginning, I put them inside an interval bash script loop. Now I have a better version using Claude Agent SDK in TypeScript called duyetbot-agent (need a better name!) that runs 24/7, written by Claude Code, but you can plan to build something similar with some ideas:

I was thinking of publishing it for general use, but it still needs a lot of work to be generic enough. (2026-01-25: Yes, someone did it. Check out OpenClaw (Clawdbot → Moltbot → OpenClaw, they changed the name a few times) - a personal agent. I'm really impressed; you might try it out.) Don't expect too much from me though, some big name will probably do it first. I believe this is a milestone the industry has always wanted to reach before we get to AGI.

Claude Code + Ralph Loop is the easiest way to try this. It uses a Stop hook to extend your session and keep working for hours or days to complete your task. See Boris's explanation:

Claude consistently runs for minutes, hours, and days at a time (using Stop hooks) https://x.com/simonw/status/2004916070973645242

I've also put my agent's source code folder under its own loop to build a self-improving coding agent. I want to see how feasible it is to have an agent that codes itself. It's not as good as I expected and has many issues, but it's still something I want to achieve:

- a bad execution loop or a bad plan generates a broken agent that stops or gets stuck in infinite loops

- reflection mechanism: it needs to extract failures, error patterns, and root causes to improve the codebase itself

- better context engineering and long-term memory improvement

- code safety, detect potentially dangerous operations, monitoring and rollback, etc
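For the code safety item, one simple starting point is a pattern check on shell commands before the agent runs them. The Python sketch below is an illustration under that assumption, not what my agent does; the patterns and the flag_dangerous name are hypothetical, and a real denylist would need to be much broader.

```python
import re

# Illustrative patterns only; a real list would be project-specific.
DANGEROUS_PATTERNS = [
    # rm with both -r and -f in the same flag group, in any order
    (re.compile(r"\brm\s+-(?=\w*r)(?=\w*f)\w+"), "recursive force delete"),
    (re.compile(r"\bgit\s+push\b.*(--force|-f\b)"), "force push"),
    (re.compile(r"\bgit\s+reset\s+--hard\b"), "hard reset"),
    (re.compile(r"\bdrop\s+(table|database)\b", re.IGNORECASE), "destructive SQL"),
]


def flag_dangerous(command: str) -> list[str]:
    """Return the reasons a shell command should be held for review."""
    return [
        reason
        for pattern, reason in DANGEROUS_PATTERNS
        if pattern.search(command)
    ]
```

An empty list means the command passes this check. Anything else can be logged, blocked, or routed to a human, which is also a natural place to hang the monitoring and rollback logic.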

Some solutions out there you can try: Continuous Claude, Continuous-Claude-v3

Good reads:

- Scaling long-running autonomous coding

- Anthropic CEO on Claude, AGI & the Future of AI & Humanity | Lex Fridman Podcast #452

- 2025 LLM Year in Review - Andrej Karpathy

Next: Claws

Claude Code + Ralph Loop

The ralph-wiggum plugin is my favorite for long-running tasks or vibe coding on fun projects while I'm asleep. You define a goal condition and let the agent loop until it verifiably reaches that goal. With cheap Z.AI GLM 4.7 tokens, I can let it run 24/7. Run it with --permission-mode=dontAsk or --dangerously-skip-permissions.
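The core idea of looping until a verifiable goal can be sketched in a few lines of Python. This is not how the ralph-wiggum plugin is implemented; run_agent and goal_reached are hypothetical callables, and the iteration budget is my own addition to keep a stuck agent from running forever.

```python
from typing import Callable


def loop_until_goal(
    run_agent: Callable[[int], None],
    goal_reached: Callable[[], bool],
    max_iterations: int = 50,
) -> int:
    """Run the agent until goal_reached() is true.

    Returns the number of agent runs used. Raises RuntimeError if the
    budget runs out before the goal is met.
    """
    for iteration in range(1, max_iterations + 1):
        # Verify before each run so a finished task costs nothing.
        if goal_reached():
            return iteration - 1
        run_agent(iteration)
    if goal_reached():
        return max_iterations
    raise RuntimeError(f"goal not reached after {max_iterations} iterations")
```

In practice goal_reached would be something checkable from outside the model, such as the test suite exiting with code 0, rather than the agent's own claim that it is done.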

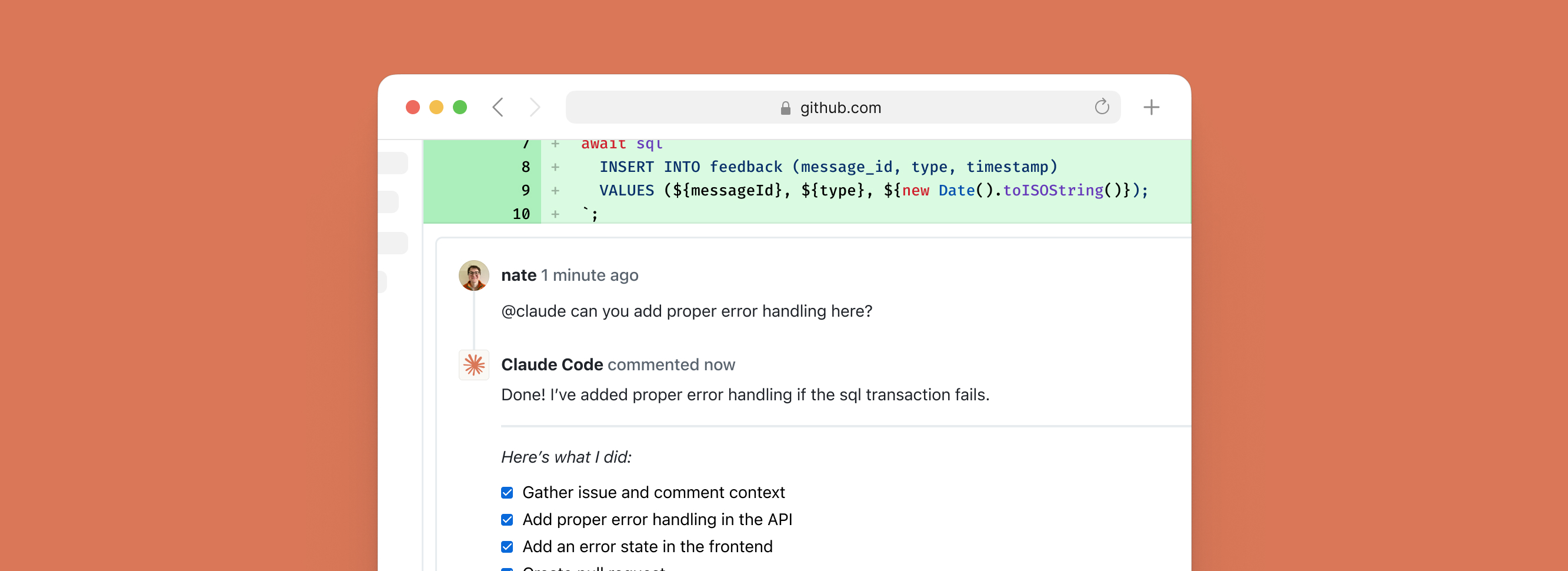

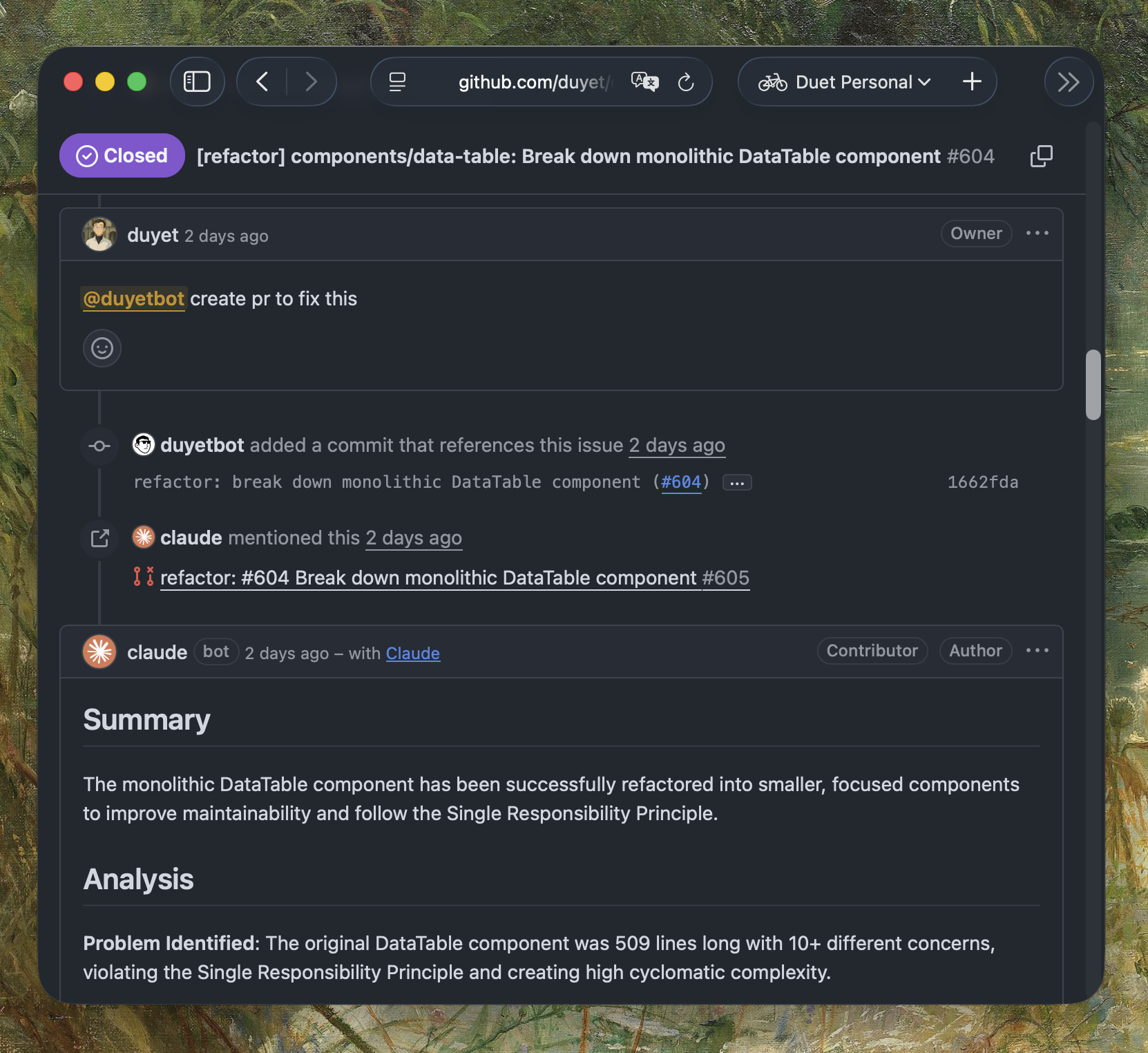

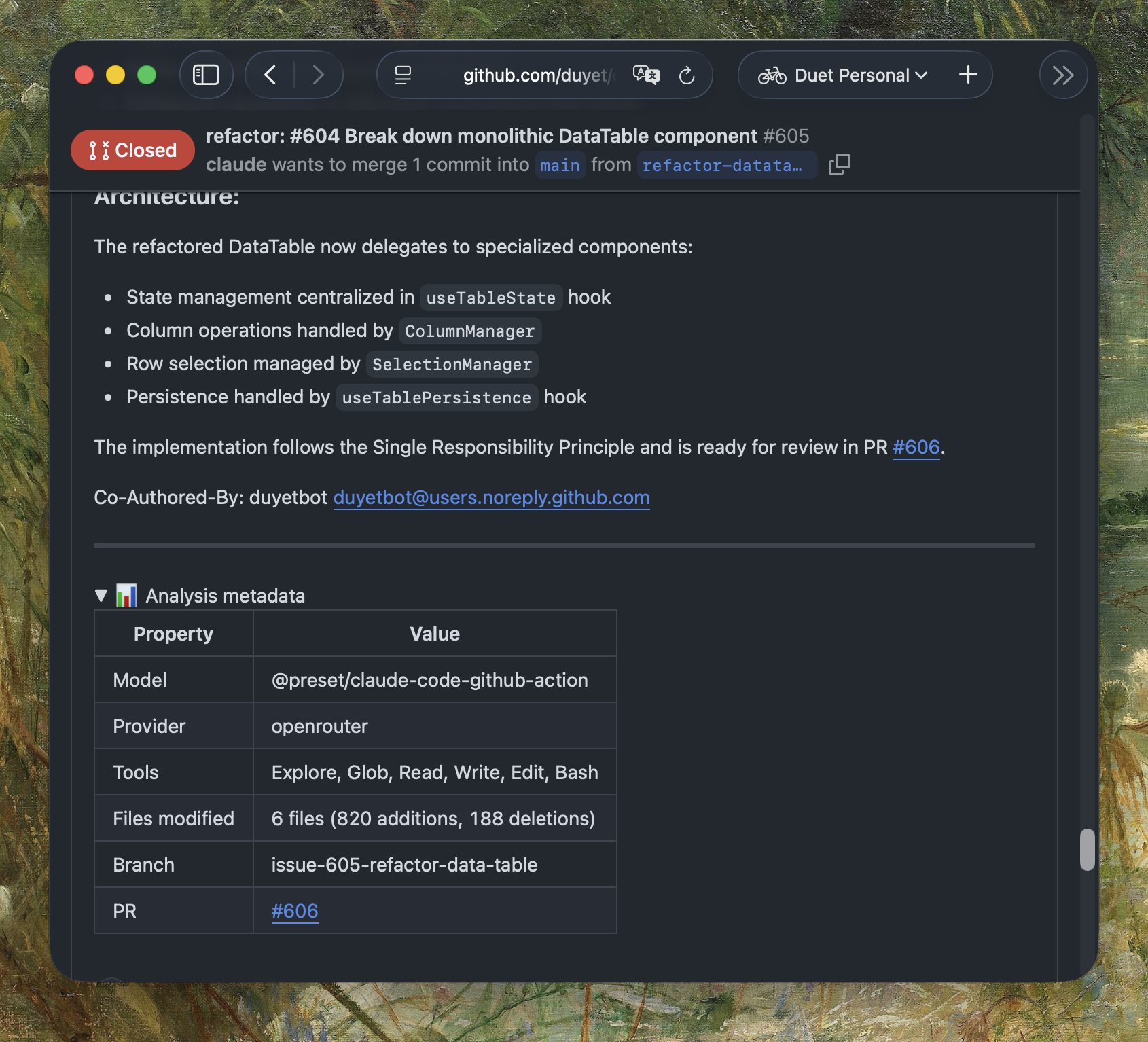

Claude Code (+ OpenRouter) on GitHub Actions

Something else you can try to maximize automation is Claude Code Action, which is the Claude Agent SDK running on GitHub Actions. The best part is that I'm running Claude GitHub Actions with OpenRouter at no cost by using free models. I have an OpenRouter preset that can switch between SOTA free models automatically.

I put together some reusable workflows at duyet/github-actions that other repos can reuse:

Check out the official documentation: Claude Code GitHub Actions. Some use cases:

- Code Review - Automated PR reviews with AI feedback

- Nightly Codebase Analysis - A scheduled workflow that scans the codebase every night, finds things to improve or refactor, creates an issue, and assigns it to @claude to fix via PR

- Triggering cross-repo workflows (e.g. SDK change -> updates docs).

This way you can have Claude Code + OpenRouter free or cheap models running 24/7 for you. A lot of automation becomes possible: smart cronjobs, automated refactoring, documentation sync, etc. The AI does the boring stuff while you sleep.

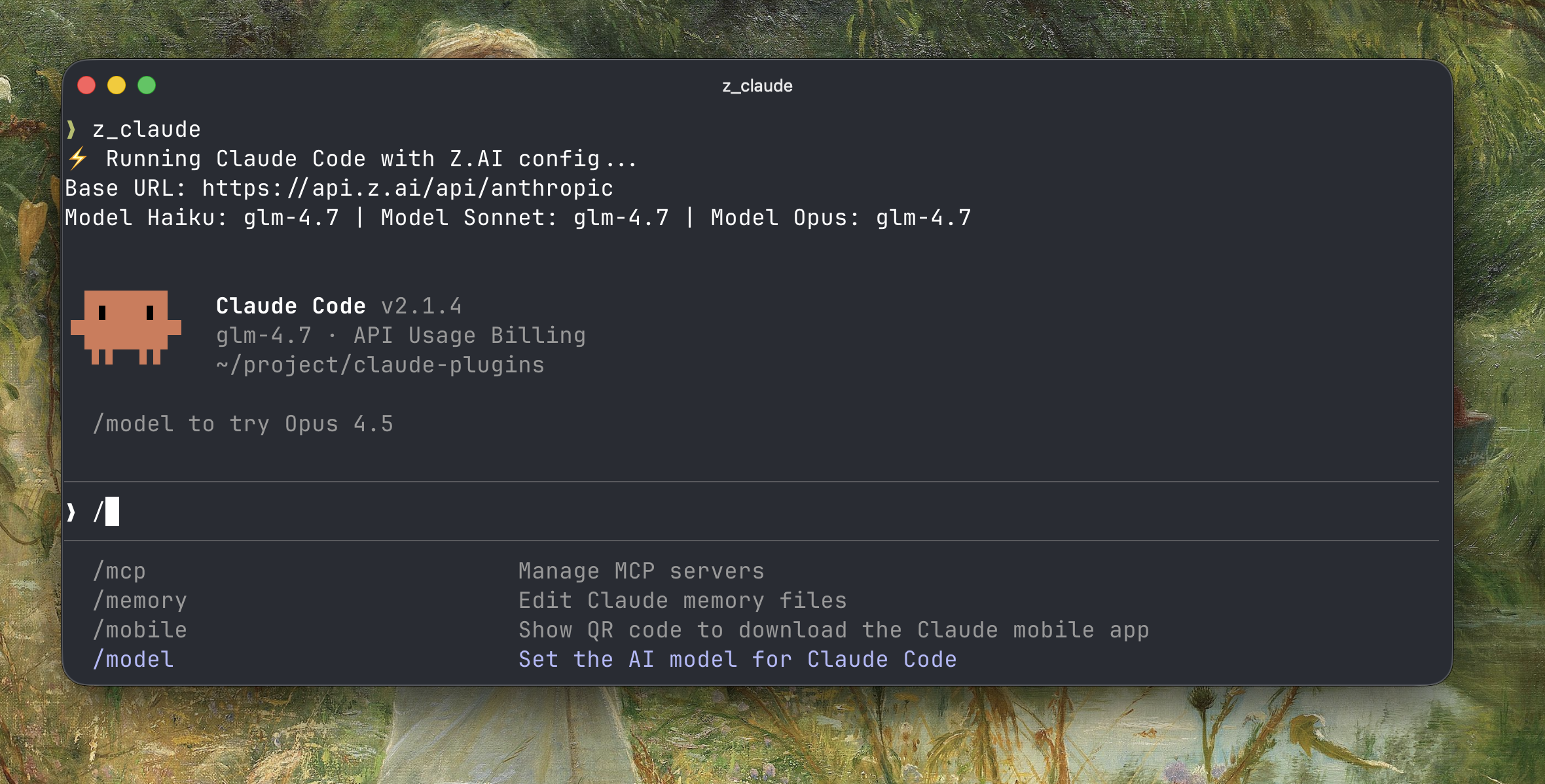

z_claude, mi_claude & or_claude

The good thing about Claude Code is that you can use it with alternative providers that offer the same Anthropic API interface. I've created some wrapper scripts for this:

- z_claude - Uses Z.AI's GLM 4.7 model, which works great. Unbelievably cheap (starts at $3/mo). I use this a lot to burn their tokens instead of my Claude MAX subscription.

- mi_claude - Uses Xiaomi Mimo API.

- or_claude - Uses OpenRouter models. Plenty of good free models available, though with rate limits.

You can start working with claude using Opus, then exit and continue the same session with z_claude --continue. Use mi_claude or or_claude the same way.
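My wrappers themselves are not shown here, so this is only a Python sketch of the general shape such a wrapper can take. It assumes Claude Code picks up ANTHROPIC_BASE_URL and ANTHROPIC_AUTH_TOKEN from the environment; the endpoint URL is a placeholder, and provider_env, wrapper_command, and launch are hypothetical names.

```python
import os
from typing import Mapping

# Placeholder; use the Anthropic-compatible URL from your provider's docs.
PROVIDER_BASE_URL = "https://provider.example/anthropic"


def provider_env(
    base_env: Mapping[str, str], base_url: str, api_key: str
) -> dict[str, str]:
    """Copy the environment and point Claude Code at another provider."""
    env = dict(base_env)
    env["ANTHROPIC_BASE_URL"] = base_url
    env["ANTHROPIC_AUTH_TOKEN"] = api_key
    return env


def wrapper_command(extra_args: list[str]) -> list[str]:
    """Forward all arguments (for example --continue) to the claude CLI."""
    return ["claude", *extra_args]


def launch(extra_args: list[str], api_key: str) -> None:
    """Replace the current process with claude under the provider env."""
    env = provider_env(os.environ, PROVIDER_BASE_URL, api_key)
    os.execvpe("claude", wrapper_command(extra_args), env)
```

Because the wrapper only changes the environment and forwards arguments untouched, flags like --continue behave the same way as with plain claude.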

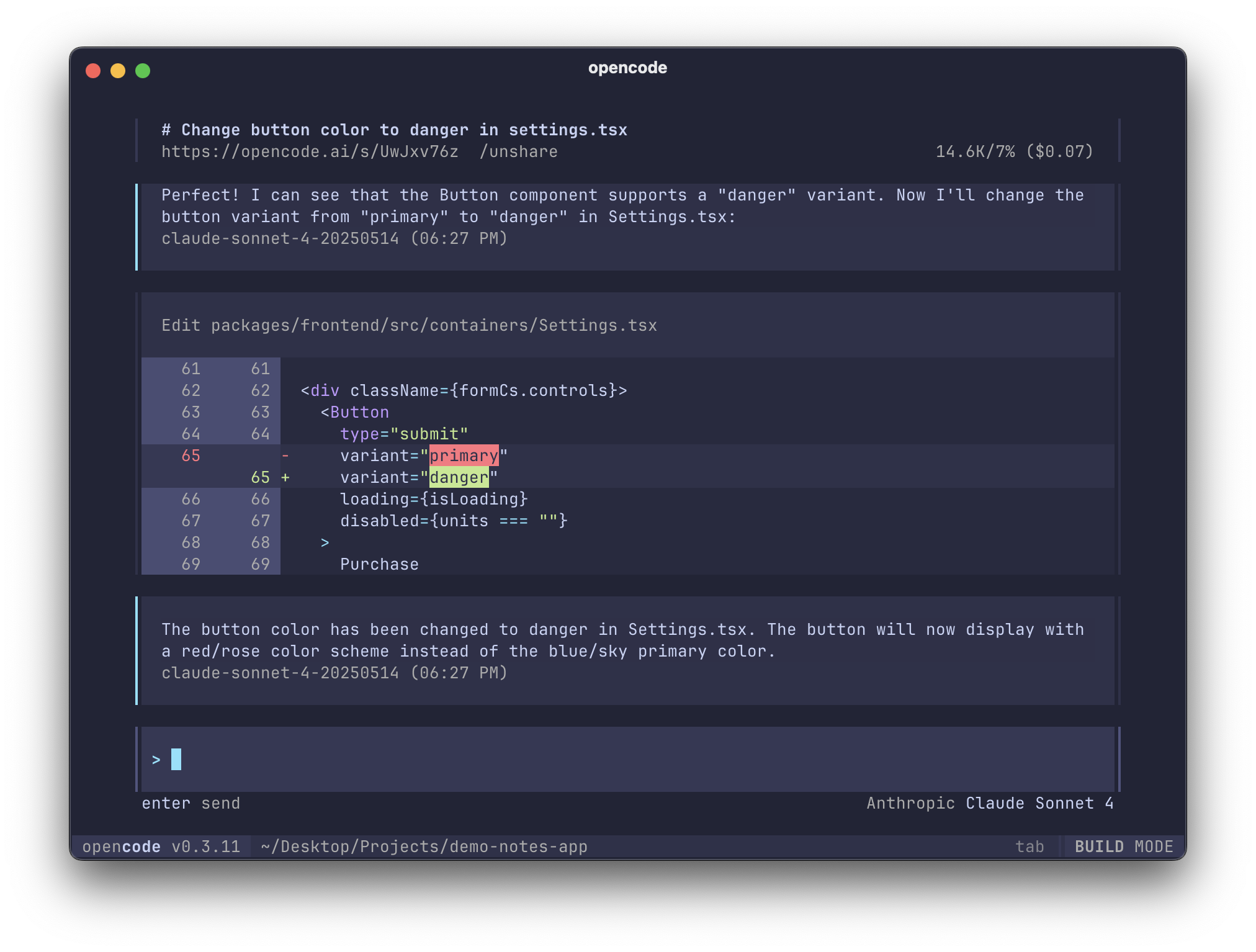

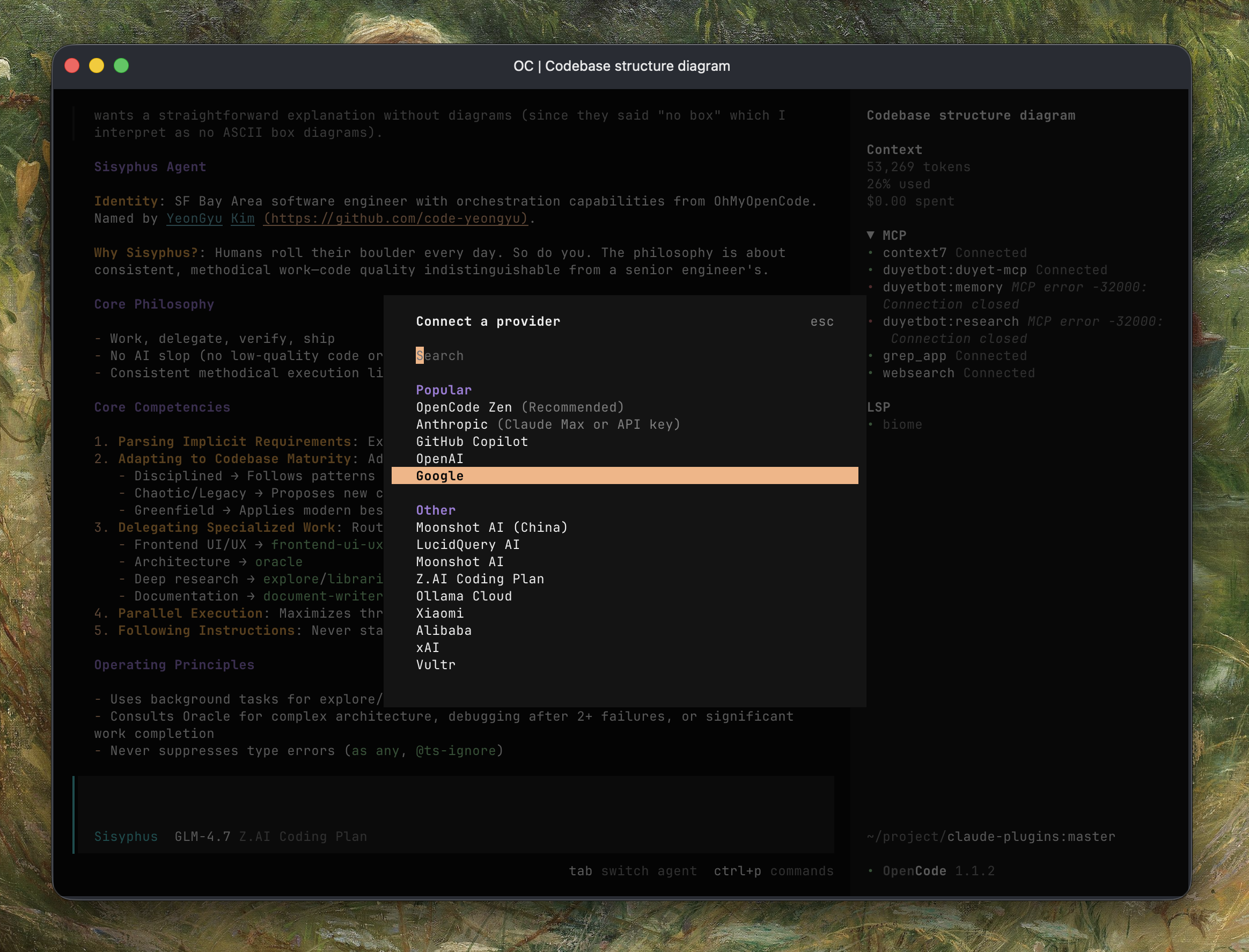

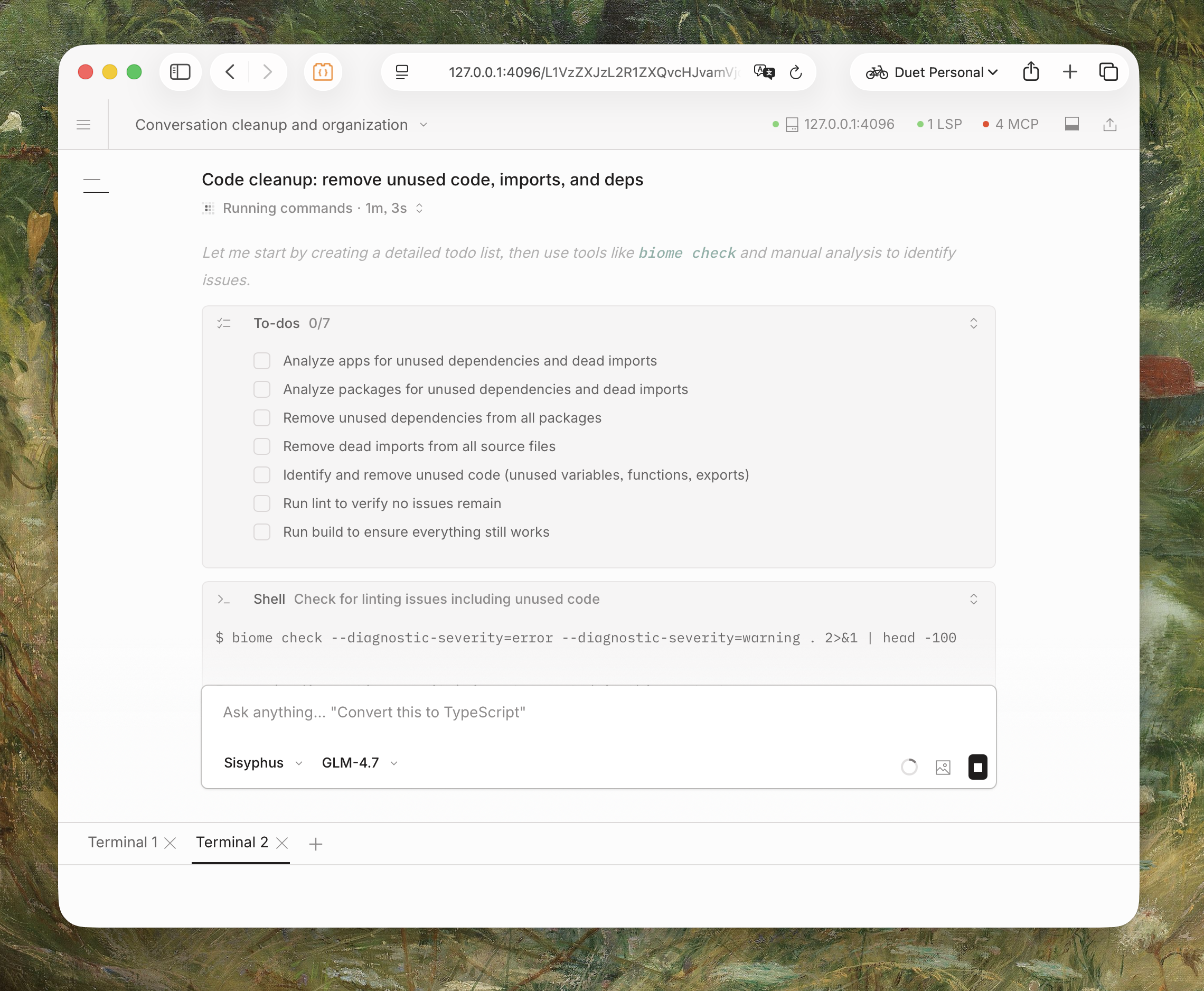

opencode

If you want to try a good coding agent with nice UI/UX - opencode is really solid right now. Fast, simple, and it reads all your Claude config and plugins out of the box.

It can consolidate all your subscriptions (Claude Code, xAI, Z.AI, GitHub Copilot, Codex, OpenRouter, ...) and switch seamlessly between all of their models, plus some free Zen models from opencode's own provider.

You can save and share sessions - handy when you want to show someone how you solved something. They also have a native web UI now.

I suggest trying oh-my-opencode - it adds some powerful workflows on top of opencode:

- Sisyphus agent - an orchestrator (Opus 4.6) that "keeps the boulder rolling" through autonomous task completion. It uses subagents, background parallel execution, and won't stop until tasks are actually finished

- Multi-model orchestration - coordinates GPT-5.2, Gemini, and Claude by specialized purpose

- Background parallelization - runs exploration and research tasks async while main work continues

- Magic word ultrawork - add this to your prompt and it activates maximum orchestration: parallel agents, background tasks, deep exploration, relentless execution

Vibe from anywhere: opencode can also run headless on a remote machine (VM/CI runner/container) and your local CLI connects as a client. Handy for offloading heavy workloads to a beefy VM while you work from a laptop.

On the list

Things I want to test or build when I have more time:

KaibanJS

Like Trello or Asana, but for AI Agents and humans

RAGFlow

Open-source RAG engine with deep document understanding

OpenHands

AI software development agents that write and execute code

elizaOS

Build autonomous AI agents with the most popular agentic framework

OpenClaw

Personal AI agent — any OS, any platform. The lobster way 🦞

NanoBot

Ultra-lightweight OpenClaw alternative in Python, ~4k LOC

PicoClaw

Go single binary, <10 MB RAM, runs on a $10 board

ZeroClaw

Rust-based, 3.4 MB, 22+ providers. Zero overhead, zero compromise

NullClaw

Zig static binary, 678 KB, ~1 MB RAM, fits on any $5 board

Series: Pushing Frontier AI to Its Limits
